No, ER misdiagnoses are not killing 250,000 per year

You may have heard the shocking headline this week that 250,000 people die every year in the US due to misdiagnosis in the ER.

You may be even more shocked to know that this statistic is extrapolated from the death of… just one man. In a Canadian ER. Over a decade ago.

These numbers come from a report published by the Agency for Healthcare Research and Quality (AHRQ) and covered in the NY Times this week.

But as it turns out, the methods used to arrive at these estimates are very, very bad. Let’s go through them and see why…

One death, or 250,000?

The report goes through a lot of different outcomes and statistics, but let’s focus on the most eye-catching one: an estimated 250,000 deaths each year in the US due to misdiagnosis in the ER.

The report reviews the literature on this topic and finds one high-quality study (its methods are strong), along with several lower-quality ones.

The high-quality study looked at 503 patients discharged from two Canadian ERs in the late 2000s. The researchers called patients 14 days after discharge to see how they were doing (or, if they were still in the hospital, reviewed their medical charts).

The study found that of the 503 patients, 1 patient had an unexpected death that was related to a delay in diagnosis by the ER physician (details below). That man had signs of an aortic dissection (a tear in the major vessel that delivers blood from the heart) and for reasons we don’t know, the diagnosis was delayed for 7 hours.

This Canadian study is quite reasonable. But the way it was used in the AHRQ report was not.

The goal of the Canadian study was not to estimate the rate of misdiagnosis-related death in their EDs (and especially not in all the EDs across the US). They were looking at overall harms, and didn’t have a large enough sample size to estimate how often misdiagnosis leads to death.

This didn’t stop the AHRQ from misusing this single death to estimate a death rate across the entirety of the US. Dividing 1 death by 503 patients, they estimate the death rate to be 0.2%. They then multiply by total ER visits in the US: 130 million visits × 0.2% = ~250k deaths.
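
To make the arithmetic concrete, here is a minimal Python sketch of that extrapolation. The function name and the 0-death and 2-death scenarios are my own additions for illustration (they are not in the report); the 503 patients and 130 million visits are the figures quoted above.

```python
US_ER_VISITS = 130_000_000  # annual US ER visits, as quoted above
PATIENTS = 503              # patients in the Canadian study


def extrapolated_deaths(deaths_observed, patients=PATIENTS, visits=US_ER_VISITS):
    """Scale an observed death count in a small sample up to all US ER visits."""
    return deaths_observed / patients * visits


# Hypothetical scenarios: what if the study had happened to see 0 or 2 deaths?
for deaths in (0, 1, 2):
    estimate = extrapolated_deaths(deaths)
    print(f"{deaths} death(s) in {PATIENTS} patients -> ~{estimate:,.0f} deaths per year")
```

With one observed death, this lands a bit above 250,000. One more death in the sample would double the national figure, and one fewer would bring it to zero, which shows how much weight a single patient is carrying.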

I hope I don’t have to tell you how statistically terrible this is. This is not how epidemiology works.

Just change the results you don't like

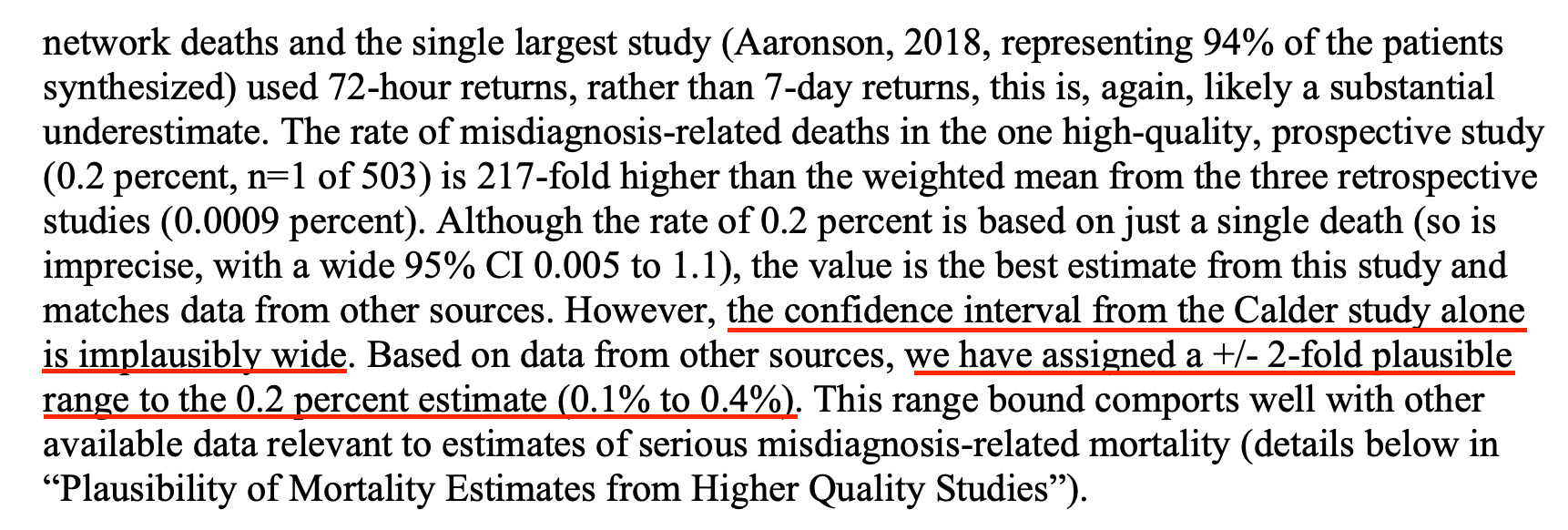

But, there’s more. Whenever estimating rates from data like this, it’s standard to also calculate a confidence interval, which provides a statistical estimate of the uncertainty around your value.

In general, the smaller the sample size, the wider the confidence interval, and the more uncertain you are that your value is the correct one.

They do calculate their confidence interval (death rate could be anywhere from 0.005% to 1.1%), but then decide it is “implausibly wide” and… just guesstimate a new one???

You… can’t do this. This is akin to changing your data simply because they seem wrong to you. You can’t just change statistical parameters. Statistical parameters come from objective analysis of the data, not guesstimates from the authors.
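
For reference, the wide interval quoted above is about what an exact binomial calculation gives for 1 death in 503 patients. The sketch below is an assumption on my part: I don’t know which method the report actually used, so I’m using a Clopper-Pearson interval (a standard exact method) solved by bisection with only the standard library. The function names are mine.

```python
from math import comb


def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def clopper_pearson(x, n, alpha=0.05):
    """Exact (Clopper-Pearson) two-sided confidence interval for x events in n trials."""

    def solve(f, target):
        # f is decreasing in p, so bisect for the p where f(p) == target.
        lo, hi = 0.0, 1.0
        for _ in range(200):
            mid = (lo + hi) / 2
            if f(mid) > target:
                lo = mid
            else:
                hi = mid
        return (lo + hi) / 2

    lower = 0.0 if x == 0 else solve(lambda p: binom_cdf(x - 1, n, p), 1 - alpha / 2)
    upper = 1.0 if x == n else solve(lambda p: binom_cdf(x, n, p), alpha / 2)
    return lower, upper


lower, upper = clopper_pearson(1, 503)
visits = 130_000_000  # annual US ER visits, as quoted earlier
print(f"95% CI for the death rate: {lower:.4%} to {upper:.2%}")
print(f"Implied US deaths per year: {lower * visits:,.0f} to {upper * visits:,.0f}")
```

Under these assumptions the interval comes out at roughly 0.005% to 1.1%, matching the range above. Scaled to 130 million visits, that is anywhere from several thousand deaths to about 1.4 million. That width is what one death in 503 patients actually supports.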

They do try to justify their guesstimated confidence interval by citing other sources. But, not shockingly, these other sources don’t hold up either.

ER docs are not clairvoyant

First, they reference a study of Medicare data showing that among people older than 65 without a terminal illness who are discharged from the ED with a non-life-threatening diagnosis, 0.12% die within 7 days (allegedly from ER misdiagnosis??).

They argue that this number (0.12%) falls inside their guesstimated confidence interval (0.1%-0.4%), which they take as evidence that the interval is right.

But there are, of course, problems with this. If you look at the study they cited, many of these deaths were not misdiagnoses. The ER diagnosis matched the death diagnosis for a significant chunk of them.

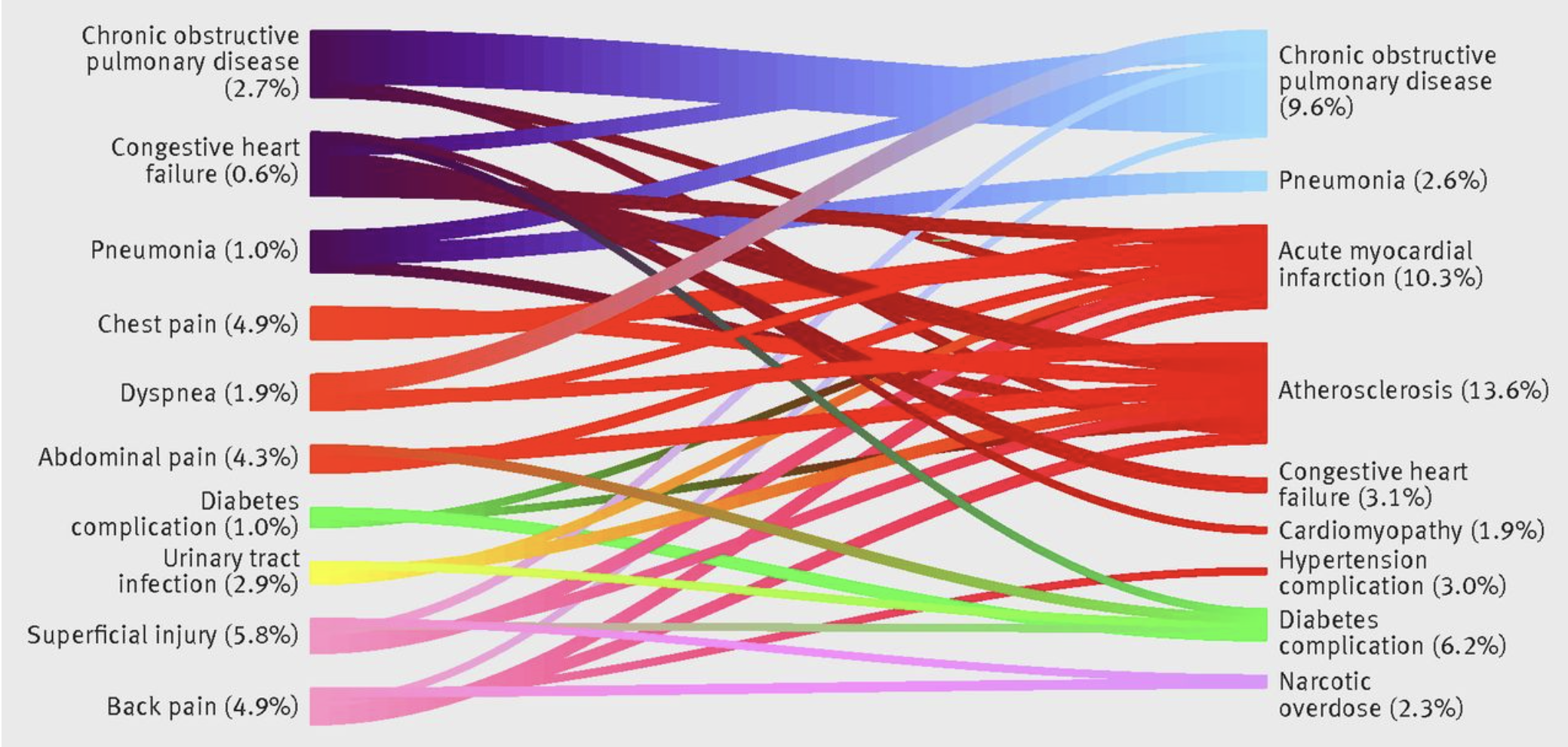

For those patients whose ER diagnosis matched their death diagnosis, does that mean the ER doc didn’t do enough to help them? Possibly. But ER docs don’t have the power to admit everyone. Say someone has a mild COPD exacerbation, responds to medicines given in the ER, and is feeling better, back to normal. It is very reasonable to send that person home (and the hospitalist would probably push back if the ER doc even tried to admit them). That doesn’t mean the ER doc can guarantee the patient won’t have another serious COPD exacerbation 4 days later.

For those patients whose ER diagnoses did not match their death diagnosis, it’s not fair to assume the ER doc just “missed it.” It’s not reasonable to expect ER docs to predict, in advance, every future illness their patients are going to have. Barring possession of a time machine, that is an impossible task.

In short, this Medicare data is not evidence of ER docs missing diagnoses. If you say it is, you are holding ER docs to a standard that is impossible to meet: predicting every near-future death from any disease.

Keep some studies, throw out others

This section has been updated 12/22/22.

Next, they try to support their guesstimated confidence interval by averaging results from two studies: one from Switzerland that looked at admitted patients, and one that looked at discharged patients in Spain.

The study in Spain looked at patients discharged from the ER who returned 3 days later, and found that 3 of them ended up dying. (They had a control group, but it wasn’t great.)

When I originally read this paper, it wasn’t immediately clear whether these deaths were due to diagnostic error, so one of my originally published criticisms was that the authors assumed it was diagnostic error when that wasn’t necessarily the case. I have re-read the paper and I think it’s a reasonable interpretation of the text that these deaths were due to diagnostic error (the language is not as explicit as I would have liked, but I think it’s fair to say it’s implied), so I retract that criticism.

Regardless, this study has the same flaws as the Canadian study: it wasn’t designed to assess the overall rate of ER misdiagnosis-related death, and the sample size is far too small (3 deaths) to infer the rate of death, just as it was for the Canadian study.

But perhaps more importantly, this Spanish study has a similar study design (looking at people who returned to the ER after 3 days) as three other studies that the AHRQ report ignored in their calculations, all three of which report VASTLY lower rates of death associated with ER misdiagnosis…

How did the AHRQ report justify ignoring these three studies? Because of their… study design. But review of the criticisms of the studies they threw out shows many of those same criticisms apply to this Spanish study, which was included in their calculations. Seems potentially biased to me.

Testing comes with risks and benefits

The study in Switzerland looked at admitted patients and compared the diagnosis the ER doc gave them to the diagnosis listed at discharge (or death). They found that when the diagnosis differed, those patients had longer hospital stays and were more likely to die.

While there are merits to this study, it doesn’t dig into the medical records to discern whether the misdiagnosis caused a delay in care that resulted in these deaths. In other words, were these deaths avoidable if the diagnosis had been arrived at earlier?

It’s very possible some deaths could have been avoided, but we don’t have an estimate of how often that happened. Furthermore, sometimes people die from complications they develop in the hospital that weren’t present in the ER, so they are impossible for the ER doc to diagnose.

Finally, one of the main conclusions from this study was that misdiagnosis happened most often when patients’ symptoms were not typical for their disease. Atypical presentations are challenging, and they are something every ER doc strives to avoid missing.

But as Dr. Jeremy Faust points out in his newsletter, the flip side of this issue is being judicious with testing — overtesting can result in harms too.

Every day on shift, we have discussions of who to test and who not to. We hate missing diagnoses, but we also can’t test everyone for everything. Focusing only on misdiagnosis of atypical symptoms, and not considering the harms of over-testing, doesn’t paint a complete picture.

A lot has changed since last century

This section was updated 12/22/22.

Finally, the report attempts to support its guesstimated confidence interval by citing a systematic review of misdiagnosis-related deaths, which gives a value for the average error rate a “modern” US-based hospital would find if it were to autopsy all of its deaths. I didn’t include this study in the original version of this post because there was no citation provided, and my googling did not yield a study that matched these results. In general, if a statistic is given in a report but no citation is provided, it’s pretty much useless, because (as we’ve seen here) it’s very important to be able to go back and see if the study actually supports the claims being made.

Turns out, it was wise not to take this claim at face value. With a little more time on my hands, I dug through the references of this 744-page report and finally found the study this statistic references. It did indeed perform a systematic review of autopsy studies looking at diagnostic error. But the “modern” era that statistic refers to is the year 2000. Every study used in the systematic review was published last century, ranging from 1953 to 1999. Furthermore, one of the main conclusions of the paper was that the diagnostic error rate is going down with time. Clearly, according to the results of this paper, it is inappropriate to use this estimated value for the year 2000 to infer the death rate in 2022.

Finally, this was not a systematic review of ER misdiagnosis-related death; many of the studies included looked at deaths in the hospital overall, not in the ER. Emergency Medicine as a specialty is relatively young, and the majority of these hospitals likely did not have physicians who completed an Emergency Medicine residency when these studies were conducted. The practice of Emergency Medicine has changed dramatically in the last twenty years — for example, we now can use ultrasound to detect life-threatening diagnoses that were previously much easier to miss. In short, this study is old, and isn’t a reliable estimate for this decade.

In conclusion, the catchy “250,000 people die from misdiagnosis in US ERs” headline is based on:

- one Canadian study where one person died

With a guesstimated confidence interval based on:

- Medicare data that requires ER docs to be clairvoyant

- one small Swiss study (33 deaths) that didn’t look at details of medical records (and only included admitted patients)

- one small Spanish study (3 deaths) that wasn’t designed to estimate ER-misdiagnosis death rates

- a systematic review of data from last century (that is not specific to ERs)

Call me underwhelmed.